Quality Assurance for MapTrip GPS Navigation

The MapTrip GPS navigation software was first launched in 2005. Since then it has proven its high quality in hundreds of thousands of devices, from consumer products and freight-forwarding trucks to police cars and fire-fighting vehicles. This article describes how this high level of quality was achieved and what is done to maintain it.

Professional software you can rely on

From the beginning, MapTrip has been designed for use in professional environments. In the consumer domain, navigation software may be a nice-to-have gadget; in a professional environment it is an indispensable, mission-critical tool. It must therefore be absolutely reliable. This is the challenge MapTrip has faced since its inception.

Customers use our navigation software on a wide variety of hardware. MapTrip must run on everything from low-cost devices with very limited resources and sturdy, purpose-built professional hardware to high-end consumer smartphones. Most of the time MapTrip does not run alone on the device but has to share the available resources with another application, and the two applications usually communicate with each other via MapTrip’s interface. This adds another degree of complexity.

MapTrip has evolved in this challenging environment for more than a decade. It is a seasoned, well-proven piece of professional software that you can rely on.

Software Development Process

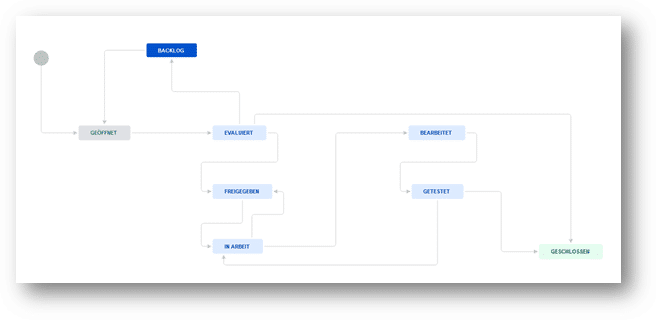

MapTrip’s quality is built into the system by design. infoware’s development process follows an eight-step workflow, which can be summarized as follows:

The development of each new feature begins with a careful planning process. The desired functionality is described and analyzed for its potential impact on the system, in particular on its stability and maintainability.

Once a new feature has been specified, the development team evaluates it. Among other things, this evaluation includes a risk assessment of the feature’s impact on the complexity and stability of the navigation software and adjacent systems.

If the task is then released for development, implementation can begin. Every night, each developer’s code is automatically checked in, backed up and tested for errors. Errors are therefore detected almost immediately and can be fixed the next morning.

When developers have finished a task, the new code is submitted for peer review. After a successful review, the new feature is passed on to the testing department.

The testing department then performs manual tests to check whether the specified functionality has been achieved. These tests are carried out both in a simulated, stationary environment and under actual driving conditions.

A newly developed feature is only tagged as complete after it has passed these tests.

Automated testing and deployment

During development, each developer’s code is automatically checked in a nightly test run comprising more than 100 individual tests. This allows the responsible developer to identify and fix errors immediately.

Before a new feature is developed, the corresponding automated tests are always written first. The test suite and its coverage therefore grow with the software.

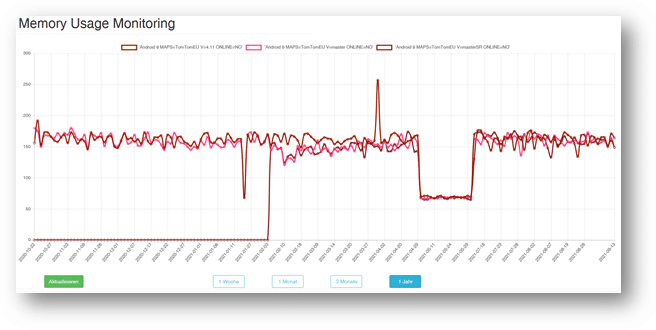

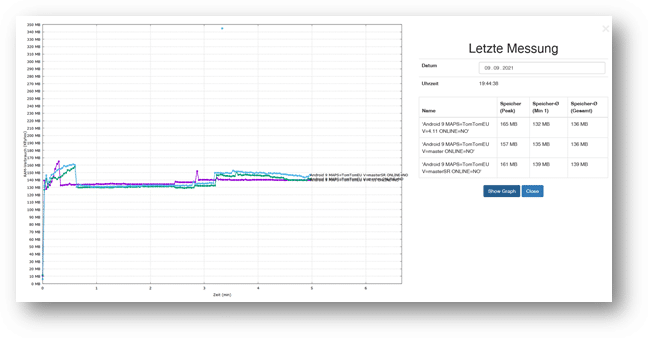

The memory consumption of the MapTrip navigation software is checked automatically, as it is an important indicator of software quality and stability.

This graph shows memory usage during the routing process and the time elapsed until the new route is available.
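To illustrate what such an automated check might look like, here is a minimal Python sketch of a regression gate for a routing benchmark. It assumes that the test harness already records peak memory (in MB) and the time until the new route is available (in ms) for each run; the names `RoutingRun` and `check_routing_run`, the baseline values and the 10 % tolerance are hypothetical and are not taken from infoware’s actual tooling.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RoutingRun:
    """Measurements from one (hypothetical) routing benchmark run."""
    peak_memory_mb: float  # peak memory during route calculation
    route_time_ms: float   # time until the new route is available


def check_routing_run(run: RoutingRun, baseline: RoutingRun,
                      tolerance: float = 0.10) -> list[str]:
    """Return a list of regressions; an empty list means the run passes.

    A metric counts as a regression if it exceeds the baseline by more
    than `tolerance` (0.10 = 10 %). Baseline and tolerance are assumptions.
    """
    failures = []
    if run.peak_memory_mb > baseline.peak_memory_mb * (1 + tolerance):
        failures.append(
            f"memory: {run.peak_memory_mb:.1f} MB > "
            f"{baseline.peak_memory_mb:.1f} MB + {tolerance:.0%}"
        )
    if run.route_time_ms > baseline.route_time_ms * (1 + tolerance):
        failures.append(
            f"route time: {run.route_time_ms:.0f} ms > "
            f"{baseline.route_time_ms:.0f} ms + {tolerance:.0%}"
        )
    return failures


if __name__ == "__main__":
    # Hypothetical numbers: memory grew by about 17 %, route time is unchanged.
    baseline = RoutingRun(peak_memory_mb=120.0, route_time_ms=800.0)
    tonight = RoutingRun(peak_memory_mb=140.0, route_time_ms=790.0)
    for problem in check_routing_run(tonight, baseline):
        print("REGRESSION", problem)
```

Comparing against a stored baseline with a tolerance, rather than a fixed absolute limit, is one way to flag gradual memory growth early while tolerating normal run-to-run noise.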

A Culture of Quality - Testing Friday

There is no such thing as bug-free software. The best any software developer can do is to follow a development process that minimizes the probability of bugs being introduced in the first place, makes them easy to identify and fix, and maintains the quality of the code to keep it future-proof.

For our MapTrip navigation software, we use Jira to track bugs and manage our development tasks. The task list is reviewed and prioritized regularly to guide how our developers’ time is spent.

To make room for such tasks in a busy environment, we have introduced what we call the “Testing Friday”. At infoware, Fridays are reserved for improving test coverage, refactoring parts of the software and fixing bugs.

Monitoring the software’s performance after deployment

Even after rigorous testing and the release of the software, the performance of selected MapTrip installations continues to be monitored. The monitored aspects include:

- Accuracy of the drive-time calculation
- Performance of map matching, since poor map matching can lead to rerouting
- Amount of data transmitted

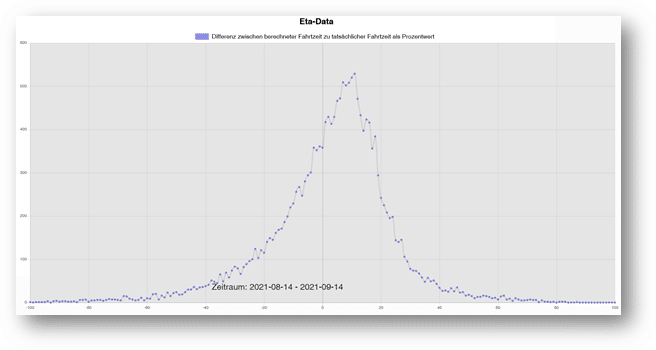

For selected devices, the accuracy of the ETA calculation is monitored so that potential issues can be identified before they become actual problems.
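One way to quantify ETA accuracy is to compare the predicted drive time with the drive time actually observed on each trip. The following Python sketch assumes trip records containing the departure time, the ETA calculated at departure and the actual arrival time; the `Trip` and `eta_accuracy` names and the 10 % threshold are hypothetical illustrations, not MapTrip’s actual monitoring code. It reports the mean absolute percentage error and the share of trips whose error stays within the threshold.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass
class Trip:
    """One monitored trip (field names are hypothetical)."""
    departure: datetime
    predicted_arrival: datetime  # ETA calculated at departure
    actual_arrival: datetime


def eta_accuracy(trips: list[Trip], threshold: float = 0.10) -> tuple[float, float]:
    """Return (mean absolute percentage error, share of trips within threshold).

    The error of a trip is |predicted drive time - actual drive time| divided
    by the actual drive time. Trips with a non-positive actual duration are skipped.
    """
    errors = []
    for trip in trips:
        actual = (trip.actual_arrival - trip.departure).total_seconds()
        predicted = (trip.predicted_arrival - trip.departure).total_seconds()
        if actual <= 0:
            continue  # avoid division by zero for degenerate records
        errors.append(abs(predicted - actual) / actual)
    if not errors:
        raise ValueError("no valid trips to evaluate")
    mape = sum(errors) / len(errors)
    within = sum(1 for e in errors if e <= threshold) / len(errors)
    return mape, within


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 8, 0)
    trips = [
        # predicted 30 min, actual 33 min
        Trip(t0, datetime(2024, 1, 1, 8, 30), datetime(2024, 1, 1, 8, 33)),
        # predicted and actual 60 min
        Trip(t0, datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 0)),
    ]
    mape, within = eta_accuracy(trips)
    print(f"MAPE: {mape:.1%}, within 10%: {within:.0%}")
```

Relating the error to the actual drive time keeps short and long trips comparable; tracking this figure over time would make a sudden drop in accuracy visible.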

Selected MapTrip installations use Google Firebase to report problems the software has encountered in the field. These remote diagnostics allow us to identify potential issues before they become actual problems.

Testing on the road

Although we continuously strive to improve the coverage of our automated tests, nothing can replace real testing on the road. GPS navigation software is extremely complex, with underlying road maps that never match reality 100%, GPS sensors that always have a margin of error, operating systems that change from version to version, hardware devices with a mind of their own, and mobile networks that don’t always provide optimal connectivity to the servers.

This multitude of environmental influences cannot be simulated. Driving on the road is therefore an integral part of our testing routine. Our testing van is a fully equipped mobile office. While the driver operates the vehicle, a test engineer in the back follows predefined test scripts, documents results and sometimes follows a hunch, always trying to push the software to its limits.